DeepStream 8 Debuts: Powering Real-Time Vision in the New Era of Jetson Thor

Published on September 27, 2025

NVIDIA is accelerating real-time vision AI with the release of DeepStream 8 and the new Jetson Thor. Together, they simplify the creation of vision pipelines while delivering major performance gains at the edge. Both are key components of Metropolis, NVIDIA’s end-to-end platform for edge vision AI. As an official Metropolis partner, Namla extends this ecosystem with cloud-native orchestration and edge management, enabling these innovations to scale securely from prototype to production.

What’s New in DeepStream 8

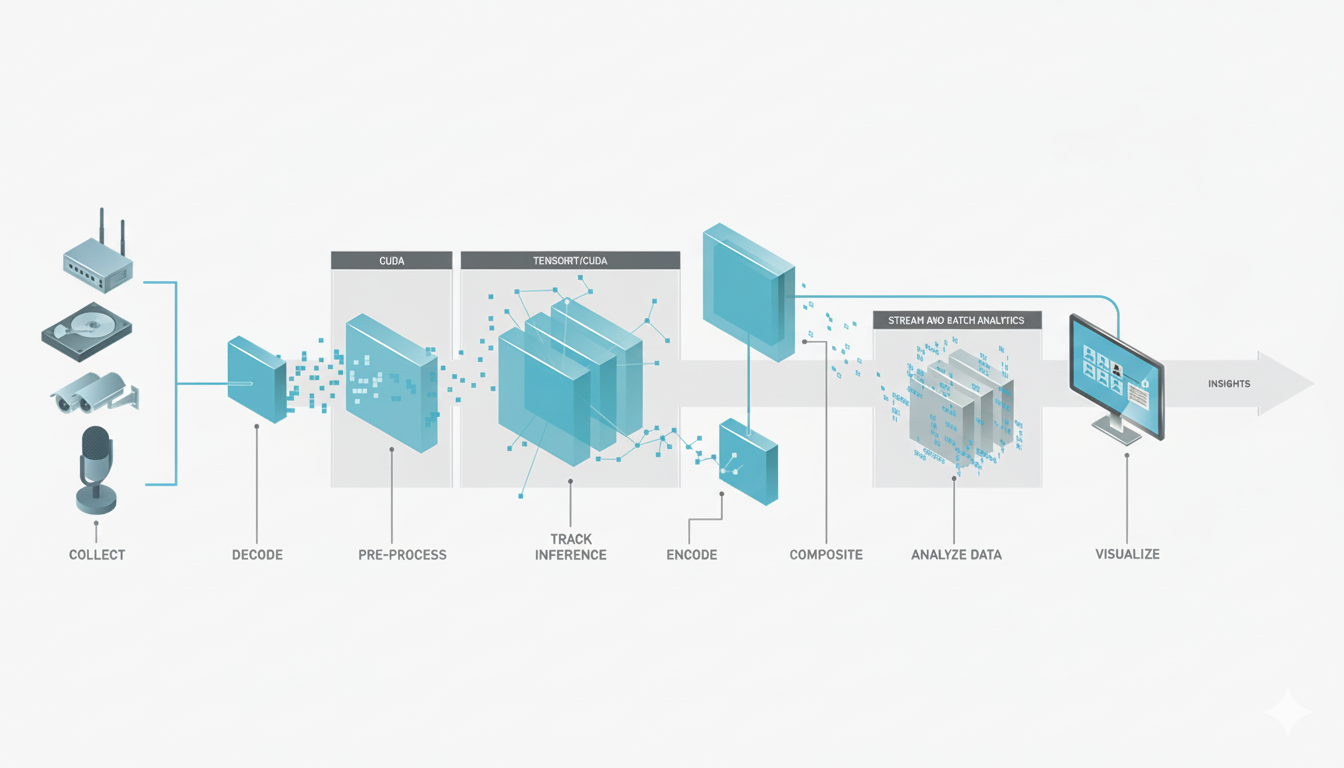

DeepStream 8.0 is a major step forward for edge AI on NVIDIA Jetson. DeepStream is NVIDIA’s end-to-end streaming analytics toolkit for real-time video understanding and AI inference at the edge. Version 8.0 adds support for Blackwell GPUs and Jetson Thor, bringing breakthrough performance to next-generation edge AI systems. It integrates with Triton Inference Server (25.03 on x86 and 25.08 on Jetson) and builds on JetPack 7.0 (r38.2 BSP) for Jetson-based deployments. Enhancements such as Pyservicemaker, with new APIs for pipeline configuration and activation, further simplify application development. Together, these make DeepStream a cornerstone of NVIDIA’s edge AI stack, especially for Metropolis Microservices for Jetson (MMJ) and Jetson Thor.
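On a Jetson device, a quick sanity check before installing DeepStream 8.0 is whether the board runs the r38.2 BSP mentioned above. The sketch below assumes that the L4T release string in `/etc/nv_tegra_release` follows the usual `# R<major> (release), REVISION: <minor>...` layout. The helper names are hypothetical and this is not an NVIDIA tool. It is deliberately strict and only accepts an exact r38.2 match, since newer BSPs are not guaranteed to be compatible.

```python
import re
from pathlib import Path
from typing import Optional, Tuple

# Assumed line format of /etc/nv_tegra_release on Jetson (L4T), e.g.
# "# R38 (release), REVISION: 2.0, GCID: ..., BOARD: ..., EABI: aarch64, DATE: ..."
_RELEASE_RE = re.compile(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*(\d+)")

# r38.2 is the BSP the article ties to JetPack 7.0 and DeepStream 8.0.
REQUIRED_BSP = (38, 2)


def parse_l4t_release(text: str) -> Optional[Tuple[int, int]]:
    """Return (major, revision) parsed from nv_tegra_release text, or None."""
    match = _RELEASE_RE.search(text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def read_l4t_release(path: str = "/etc/nv_tegra_release") -> Optional[Tuple[int, int]]:
    """Read and parse the release file; None when it is missing or unreadable."""
    try:
        return parse_l4t_release(Path(path).read_text())
    except OSError:
        return None  # e.g. not running on a Jetson device


def is_required_bsp(version: Optional[Tuple[int, int]]) -> bool:
    """True only for an exact match with the hypothetical REQUIRED_BSP."""
    return version == REQUIRED_BSP


if __name__ == "__main__":
    version = read_l4t_release()
    print(f"L4T version: {version}, matches r38.2: {is_required_bsp(version)}")
```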

DeepStream 8 introduces the Inference Builder, which lets developers generate a complete inference pipeline from a config file instead of hand-coding long GStreamer scripts.

Key features include:

Simple config → full inference pipeline.

Multi-backend support (DeepStream, Triton, TensorRT).

Advanced vision functions like multi-camera 3D tracking and pixel-level MaskTracker.

Easy integration with models trained using the TAO Toolkit.

Result: faster development, less boilerplate, and quick deployment in Docker containers.
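To illustrate the config-to-pipeline idea, here is a minimal sketch that expands a small config dictionary into a `gst-launch-1.0` style pipeline description. The config keys, file paths, and function names are hypothetical and do not reflect the Inference Builder's real schema. The element names (`nvstreammux`, `nvinfer`, `nvinferserver`, `nvtracker`, `nvvideoconvert`, `nvdsosd`) are standard DeepStream GStreamer plugins, with `nvinferserver` being the Triton-backed inference element. A working pipeline would likely need additional properties.

```python
from typing import Any, Dict

# Hypothetical config schema for illustration only; this is NOT the
# Inference Builder's actual file format.
EXAMPLE_CONFIG: Dict[str, Any] = {
    "source": "file:///opt/videos/sample.mp4",   # hypothetical input URI
    "backend": "triton",                          # "deepstream", "tensorrt" or "triton"
    "model_config": "/opt/models/detector.txt",   # hypothetical model config path
    "batch_size": 1,
    "resolution": (1920, 1080),
    "tracker": True,
}

# nvinfer runs TensorRT engines directly; nvinferserver delegates to Triton.
_INFER_ELEMENT = {"deepstream": "nvinfer", "tensorrt": "nvinfer", "triton": "nvinferserver"}


def build_pipeline_description(cfg: Dict[str, Any]) -> str:
    """Expand a small config dict into a gst-launch-1.0 style description string."""
    backend = cfg["backend"]
    if backend not in _INFER_ELEMENT:
        raise ValueError(f"unsupported backend: {backend}")
    width, height = cfg["resolution"]
    stages = [
        f"nvstreammux name=mux batch-size={cfg['batch_size']} width={width} height={height}",
        f"{_INFER_ELEMENT[backend]} config-file-path={cfg['model_config']}",
    ]
    if cfg.get("tracker"):
        # A real nvtracker also needs ll-lib-file / ll-config-file properties.
        stages.append("nvtracker")
    stages += ["nvvideoconvert", "nvdsosd", "fakesink"]
    main_branch = " ! ".join(stages)
    # The source branch feeds the muxer's first request pad.
    source_branch = f"uridecodebin uri={cfg['source']} ! mux.sink_0"
    return f"{main_branch} {source_branch}"


if __name__ == "__main__":
    print(build_pipeline_description(EXAMPLE_CONFIG))
```

Switching `backend` between `"deepstream"` and `"triton"` changes only the inference element, which is the kind of repetitive editing a config-driven builder removes.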

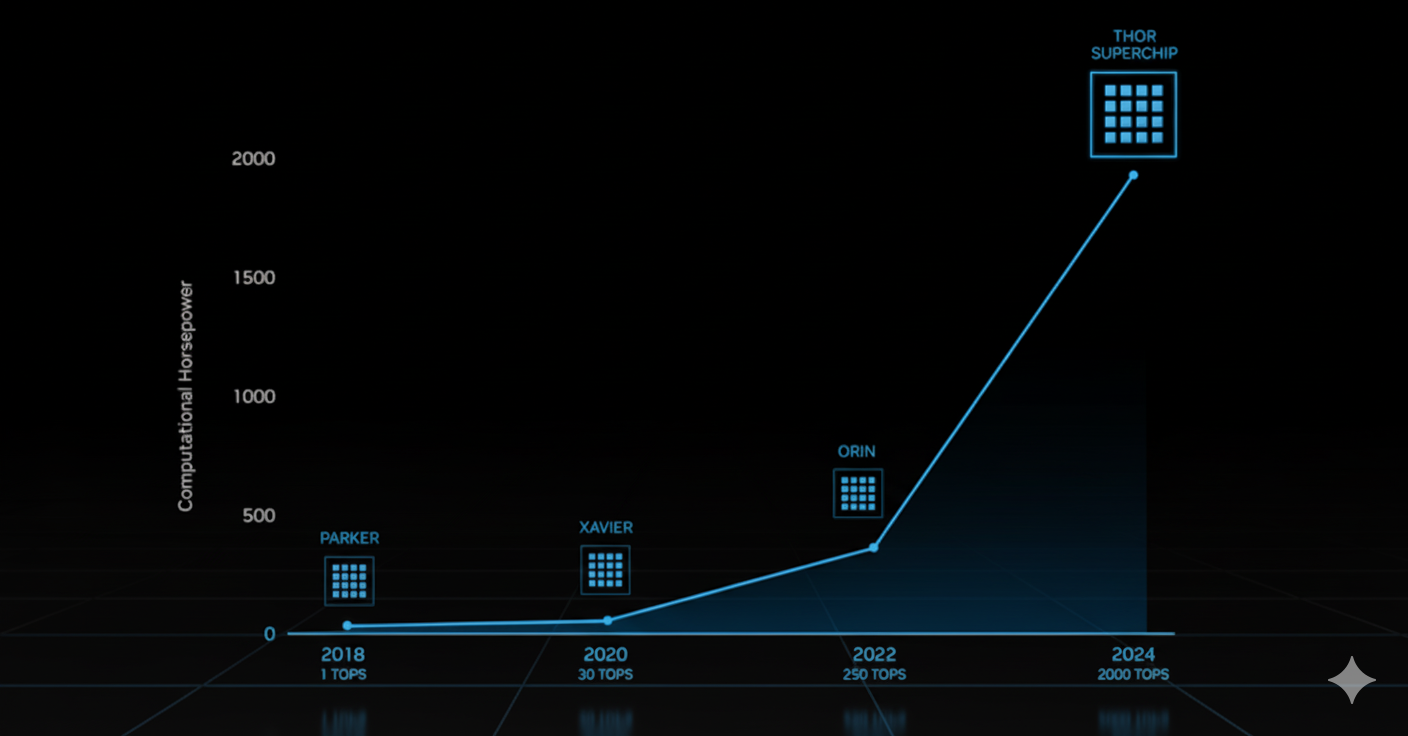

Jetson Thor: More Power at the Edge

The Jetson AGX Thor series delivers a massive leap in edge AI compute, offering up to 7.5× the performance of Jetson Orin. Powered by the new Blackwell GPU architecture, Thor is optimized for FP4 and FP8 inference, enabling advanced Edge AI workloads like multi-camera perception, sensor fusion, robotics, and even generative AI models directly at the edge. With tight integration into NVIDIA’s Isaac, Metropolis, and Holoscan stacks, Jetson Thor is designed to run larger, more complex, and multi-modal Edge AI systems without relying on the cloud.
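A back-of-the-envelope calculation shows why FP8 and FP4 support matters for running generative models at the edge: weight storage scales linearly with the number of bits per weight. This sketch assumes a hypothetical 7-billion-parameter model and counts weights only, ignoring activations, KV cache, and the per-block scale factors that FP8/FP4 quantization schemes typically add.

```python
# Bits used to store each weight at common inference precisions.
BITS_PER_WEIGHT = {"fp16": 16, "fp8": 8, "fp4": 4}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate weight storage in decimal gigabytes (1 GB = 1e9 bytes).

    Counts weights only; activations, KV cache and quantization
    scale factors are deliberately ignored.
    """
    bits = BITS_PER_WEIGHT[precision]
    return num_params * bits / 8 / 1e9


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for precision in ("fp16", "fp8", "fp4"):
        print(f"{precision}: {weight_memory_gb(params, precision):.1f} GB")
```

For 7 billion parameters this prints 14.0 GB at FP16, 7.0 GB at FP8, and 3.5 GB at FP4: each halving of precision halves the weight footprint.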

Namla & NVIDIA Metropolis: Scaling Edge AI with Cloud-Native Orchestration

As an official NVIDIA Metropolis partner, Namla brings cloud-native orchestration and edge management to the ecosystem. This enables Metropolis microservices to run securely and at scale on Kubernetes-native infrastructure, connected from the cloud to the edge over SD-WAN.
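As a rough picture of what "Kubernetes-native" means for a vision microservice, the sketch below builds a minimal `apps/v1` Deployment for a GPU-backed container and prints it as JSON, which `kubectl apply -f` accepts. It is not Namla's or MMJ's actual manifest: the image name and function name are hypothetical, and it assumes the cluster has a RuntimeClass named `nvidia` and an NVIDIA device plugin that advertises the `nvidia.com/gpu` resource on Jetson nodes.

```python
import json
from typing import Any, Dict


def deepstream_deployment(name: str, image: str, replicas: int = 1) -> Dict[str, Any]:
    """Build a minimal Kubernetes apps/v1 Deployment for a GPU-backed container."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    # Assumption: a RuntimeClass named "nvidia" exists on the cluster.
                    "runtimeClassName": "nvidia",
                    # Jetson modules are arm64; this well-known node label pins scheduling.
                    "nodeSelector": {"kubernetes.io/arch": "arm64"},
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Assumption: a device plugin advertises nvidia.com/gpu.
                        "resources": {"limits": {"nvidia.com/gpu": 1}},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = deepstream_deployment(
        "vision-analytics", "registry.example.com/deepstream-app:latest"  # hypothetical image
    )
    print(json.dumps(manifest, indent=2))
```

Scaling out is then a matter of changing `replicas` (or templating the same structure with Helm values) rather than configuring each device by hand.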

At the core of this partnership is Metropolis Microservices for Jetson (MMJ), NVIDIA’s new platform that simplifies the development, deployment, and management of edge AI applications on Jetson. MMJ makes DeepStream its central building block for streaming analytics and inference, and integrates components such as the Video Storage Toolkit (VST) and new analytics services. Combined with NVIDIA hardware, including Jetson Thor, MMJ establishes a new foundation for building scalable, production-grade AI at the edge.

Namla extends MMJ with advanced orchestration and life-cycle management capabilities. These address the needs of production environments:

Cloud-native SD-WAN integration for secure cloud-to-edge connectivity

Kubernetes-native manifests and Helm deployments to scale MMJ microservices

GPU and system monitoring using NVIDIA DCGM and Jetson state metrics

Remote access to Jetson Thor, Orin, and other Jetson devices for life-cycle management

Centralized container service logging for troubleshooting and faster issue resolution
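For the monitoring capability, NVIDIA's DCGM exporter publishes GPU metrics in the Prometheus text format, including `DCGM_FI_DEV_GPU_UTIL` for GPU utilization. The following sketch parses such output and flags busy GPUs. It assumes the simple `name{labels} value` sample layout and a `Hostname` label; label names can differ between exporter versions, and Jetson state metrics may come from a separate exporter. The function names and the 90% threshold are hypothetical, and this is not Namla's monitoring pipeline.

```python
import re
from typing import Dict, List, Tuple

# Simple Prometheus text-format sample: name{labels} value [timestamp]
_SAMPLE_RE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)")
# key="value" pairs; escaped characters are kept as-is (not unescaped).
_LABEL_RE = re.compile(r'(\w+)="((?:[^"\\]|\\.)*)"')

Sample = Tuple[str, Dict[str, str], float]


def parse_samples(text: str) -> List[Sample]:
    """Parse metric samples, skipping blank lines and # HELP / # TYPE comments."""
    samples: List[Sample] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        match = _SAMPLE_RE.match(line)
        if match is None:
            continue
        name, raw_labels, value = match.groups()
        labels = dict(_LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))
    return samples


def busy_gpus(text: str, threshold: float = 90.0,
              metric: str = "DCGM_FI_DEV_GPU_UTIL") -> List[Tuple[str, str, float]]:
    """Return (hostname, gpu index, value) for samples at or above the threshold."""
    return [
        (labels.get("Hostname", "?"), labels.get("gpu", "?"), value)
        for name, labels, value in parse_samples(text)
        if name == metric and value >= threshold
    ]
```

In practice the input text would come from scraping each node's exporter endpoint, and the flagged hosts could feed an alert or a log query.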

NVIDIA Metropolis and Namla empower developers to move from prototype to production faster, securely, and at scale.
