Namla to Scale Edge AI with NVIDIA Jetson and NVIDIA Metropolis Platforms

Published on May 28, 2024

Namla, a provider of edge orchestration & management solutions, has announced it is collaborating with NVIDIA to enhance the scalability of edge AI operations for companies.

Namla offers an integrated platform for deploying edge AI infrastructure, managing devices, and orchestrating applications while ensuring edge-to-cloud connectivity and security. This collaboration with NVIDIA will help enable businesses in various sectors, such as retail, manufacturing, healthcare, oil & gas, and video analytics, to scale up their deployment of NVIDIA Jetson systems-on-module and streamline the implementation of edge AI applications using NVIDIA Metropolis microservices.

The collaboration seeks to advance the way companies approach their edge AI projects, helping facilitate seamless scaling to thousands of locations with connectivity, security, and effective application orchestration.

Namla Edge Orchestration & Management

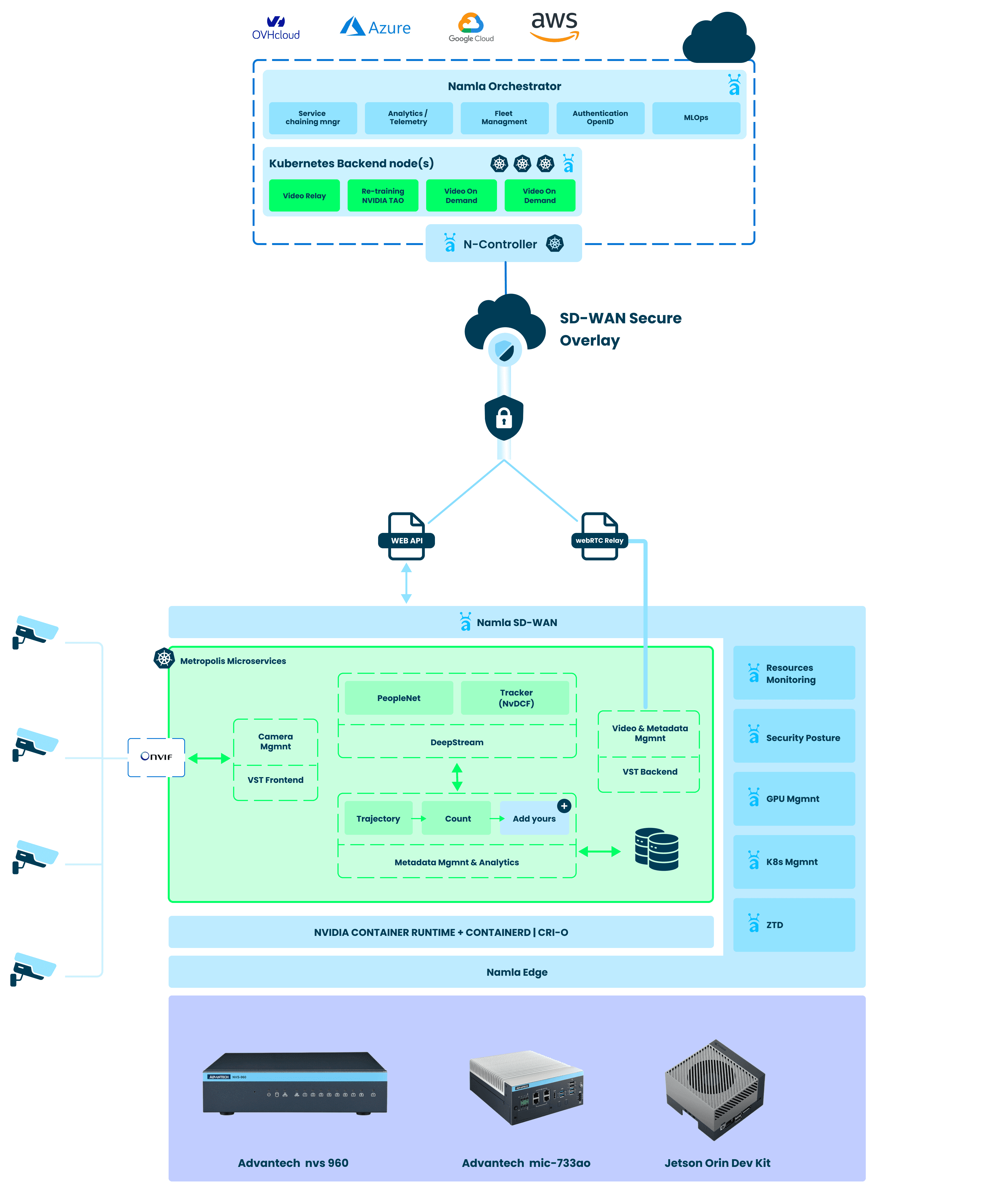

Namla is an advanced edge orchestration and management platform that simplifies the setup and management of large-scale, distributed edge devices. The platform is designed to support the entire lifecycle of distributed edge infrastructure, including device deployment and management, application orchestration, and secure connectivity. Its main objective is to deliver a comprehensive solution that reduces the complexities of managing edge resources while ensuring strong security and seamless integration from the edge to the cloud. With its focus on edge AI, Namla offers a powerful solution for deploying and managing GPU-based devices and for efficiently orchestrating AI applications across thousands of locations.

Powered by NVIDIA Metropolis

NVIDIA Metropolis microservices offer robust, adaptable, and cloud-native components for crafting vision AI applications and solutions, optimized to operate on NVIDIA cloud and data center GPUs, as well as on the NVIDIA Jetson Orin™ edge AI platform. These microservices empower companies to extract valuable insights across various environments, from retail and warehouse settings to airports and roadways, through the use of reference applications that tackle a broad spectrum of vision AI challenges such as multi-camera tracking, occupancy heatmaps, AI-driven NVRs, and beyond. The microservices streamline the process of prototyping, constructing, evaluating, and scaling applications from the cloud to the edge, delivering greater efficiency, resilience, and security. Furthermore, with NVIDIA microservices, developers can integrate the latest generative AI technologies at the edge with straightforward API calls, enhancing the capabilities and performance of their solutions.

Scaling Edge AI with Namla

The goal of Namla’s collaboration with NVIDIA is to assist organizations in scaling their edge AI operations. Through this collaboration, companies and public agencies can leverage a complete edge AI solution that utilizes NVIDIA Jetson and NVIDIA Metropolis for building edge AI applications, all managed and deployed efficiently using Namla’s Orchestration and Management platform. Key advantages of integrating Namla with NVIDIA Jetson and Metropolis include:

- Streamline the deployment & management of NVIDIA edge AI infrastructure

By leveraging the strengths of Namla’s Zero-Touch Deployment (ZTD), companies can now efficiently deploy thousands of NVIDIA Jetson edge AI devices. Namla’s ZTD feature eliminates the need for onsite configurations, enabling the provisioning of NVIDIA Jetson modules in just 15 minutes by simply connecting the device to power and the internet.

Additionally, Namla offers extensive observability and monitoring capabilities, providing real-time insights into every element of the infrastructure, from hardware components such as CPU, GPU, and memory to networking, along with comprehensive application monitoring. This ensures a 360° view of the entire infrastructure, facilitating the rapid identification and resolution of potential issues, thereby minimizing downtime. Furthermore, Namla offers secure remote capabilities that allow swift interventions and operations on each device or application.

- Edge-to-Cloud connectivity & security

Namla has developed the first cloud-native SD-WAN solution designed to operate on NVIDIA Jetson modules, allowing companies to benefit from a fully integrated edge architecture where applications and connectivity are closely intertwined and reside on the same hardware. This integration leads to reduced CAPEX and lower operational costs. Additionally, Namla’s cloud-native SD-WAN solution creates a secure overlay between the edge and the cloud, ensuring reliable connectivity and maintaining network performance and availability, which are essential for mission-critical or customer-facing applications.

- Cloud-native orchestration of NVIDIA Metropolis

Namla establishes a Kubernetes cluster that extends from the cloud to the edge, providing organizations with a cloud-native environment directly at the edge where they can effortlessly deploy container-based applications. NVIDIA Metropolis offers a comprehensive stack for building robust end-to-end AI applications using cloud-native microservices. NVIDIA has expanded these microservices to support deployment on NVIDIA Jetson, which incorporates additional components suited for edge environments. Namla’s integration of these microservices helps enable companies to efficiently deploy the full Metropolis stack across thousands of Jetson modules while leveraging powerful Kubernetes orchestration capabilities, facilitating the development and deployment of reliable edge AI applications.

Namla’s collaboration with NVIDIA equips organizations with a powerful toolset to efficiently navigate the complexities of edge AI deployment from initial concept through to full-scale production, supporting continuous innovation and growth in the dynamic landscape of edge AI.

The integration simplifies the process of configuring, managing, and updating a vast network of edge AI devices, making it easier for companies and public agencies to expand their AI infrastructure as their needs grow. This scalability is crucial for adapting to evolving market demands and technological advancements. Additionally, the integration helps ensure that organizations can maintain high levels of performance, security, and reliability across their edge AI applications, even as they increase the number of deployed devices.

Moreover, Namla with NVIDIA Metropolis shortens the time to market for new applications and services, helping businesses quickly capitalize on new opportunities. With comprehensive monitoring and management tools, organizations gain 360° visibility over their edge AI ecosystem, facilitating proactive maintenance and optimization.